Transferring large files over the internet can take ages, and it gets even worse if your recipient is halfway across the globe. While many organizations transfer files through popular transmission control protocol (TCP)-based methods like file transfer protocol (FTP), SSH file transfer protocol (SFTP) and hypertext transfer protocol (HTTP), you can achieve shorter transfer times with the user datagram protocol (UDP). So, just how much faster can UDP be?

UDP file transfers can be up to 100x faster than traditional TCP methods like FTP and SFTP, making them ideal for large file transfers across long distances. However, UDP’s lack of built-in reliability can be a drawback. JSCAPE by Redwood’s hybrid accelerated file transfer protocol (AFTP) combines the speed of UDP with the reliability of TCP to transform your large data transfers.

Comparison tests we conducted pitting FTP against a UDP-based protocol showed that UDP can be up to 100x faster.

If you prefer a more in-depth discussion on this topic, read our whitepaper: How to boost file transfer speeds 100x without increasing your bandwidth.

Sample case studies for high-speed UDP file transfers

Many organizations want faster file transfers due to their operational needs, especially for extremely large files like videos, big data and more.

Case study: Media production company

A global media production company frequently transfers terabytes of large video files between editing and post-production teams in Los Angeles, London and Tokyo. Using TCP-based protocols like FTP led to significant delays, impacting project timelines.

By switching to a hybrid approach incorporating UDP, the company reduced its transfer times by more than 80%. This led to timely content delivery and more efficient collaboration among the company’s geographically dispersed teams.

You can experience similar results with JSCAPE’s AFTP, a TCP-UDP hybrid that enables fast and reliable transfers of large files. See AFTP in action with a free demo.

Case study: Research institution

A research institution needed to transfer terabytes of data generated from a particle accelerator experiment to a remote supercomputing center for analysis. They were on a tight schedule, so researchers wanted a solution that could significantly reduce the transfer time, as large files can take several days to arrive. By switching from a purely TCP-based protocol to one that incorporated UDP, they were able to reduce the transfer time from days to mere hours.

What is TCP?

TCP is a fundamental internet protocol that enables reliable, ordered and error-checked delivery of data between applications on a network. It’s a connection-oriented protocol, which means it establishes a connection between communicating devices before exchanging data. Modern cloud storage solutions also often utilize TCP for data transfer and communication between clients and servers. Due to its additional features, TCP generally requires more CPU processing power than UDP. However, TCP still ensures data integrity and proper packet sequencing, which is why it’s crucial for many internet applications.

Why organizations keep using TCP to transfer files

Despite TCP’s clear disadvantage when it comes to speed, most popular file transfer tools still rely on TCP-based protocols like FTP, SFTP and HTTP. TCP’s popularity as a file transfer method is largely due to its reliability. TCP provides mechanisms that ensure individual packets reach their destination intact and in the right order.

To establish correct ordering, the protocol assigns a sequence number to each TCP packet. The receiving host then reads the sequence number in each TCP packet’s header to put the data back in the correct order before handing it to the application.
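The ordering idea can be sketched in a few lines of Python. This is a simplified illustration, not how any real TCP stack is written: `reassemble` is a hypothetical helper, and each segment is assumed to carry the byte offset of its first byte as its sequence number.

```python
from typing import List, Tuple

def reassemble(segments: List[Tuple[int, bytes]]) -> bytes:
    """Rebuild a byte stream from (sequence_number, payload) pairs.

    Hypothetical helper for illustration only. The sequence number is
    assumed to be the offset of the payload's first byte in the stream.
    """
    stream = bytearray()
    # Sort by sequence number, then check that each segment starts
    # exactly where the previous one ended.
    for seq, payload in sorted(segments, key=lambda s: s[0]):
        if seq != len(stream):
            raise ValueError(f"gap or overlap at byte offset {len(stream)}")
        stream.extend(payload)
    return bytes(stream)

# Segments arriving out of order, as they might over a WAN.
arrived = [(6, b"world"), (0, b"hello "), (11, b"!")]
print(reassemble(arrived))
```

A missing segment shows up as a gap in the offsets, which is the cue for the retransmission logic.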

Although TCP packets can certainly get lost along the way, the protocol allows sending parties to detect packet loss and to retransmit lost packets. So, how does this mechanism work? When you send a TCP packet to another party, that receiving party must respond with an acknowledgement (ACK), which is a key part of the protocol.

By requiring an ACK, TCP enables the sending host to determine whether the packet it sent arrived at its destination and whether it needs to retransmit the packet. If no ACK is received, it means the transmitted packet might have been lost along the way, thereby requiring a retransmission.
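Here’s a minimal simulation of that send, wait and retransmit loop. It’s a sketch under stated assumptions: the lossy link is faked with a seeded random number generator, the sender handles one packet at a time (real TCP keeps many in flight), and `send_reliably`, `loss_rate` and `max_tries` are made-up names for illustration.

```python
import random

def send_reliably(packets, loss_rate=0.3, max_tries=20, seed=1):
    """Deliver every packet over a simulated lossy link using ACKs.

    Returns (delivered_packets, total_transmissions).
    """
    rng = random.Random(seed)  # seeded so the run is repeatable
    delivered, transmissions = [], 0
    for seq, payload in enumerate(packets):
        for _ in range(max_tries):
            transmissions += 1
            packet_lost = rng.random() < loss_rate
            ack_lost = rng.random() < loss_rate
            if not packet_lost:
                # The receiver keeps the payload only the first time it
                # sees this sequence number, so duplicates are ignored.
                if len(delivered) == seq:
                    delivered.append(payload)
                if not ack_lost:
                    break  # ACK arrived: move on to the next packet
            # No ACK before the timeout: loop around and retransmit.
        else:
            raise TimeoutError(f"packet {seq} was never acknowledged")
    return delivered, transmissions

chunks = [b"a", b"b", b"c", b"d"]
received, sent = send_reliably(chunks)
print(f"delivered {len(received)} packets using {sent} transmissions")
```

Every lost packet or lost ACK costs at least one extra transmission, which is the price of the delivery guarantee.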

Thus, when you send a file through TCP, you can be sure your recipient will receive the file exactly the way you sent it. This reliability component is crucial when you’re sending business documents, spreadsheets, health records, financial records and other important files.

In addition to its reliability mechanisms, TCP also employs congestion control and flow control algorithms that are supposed to prevent network bottlenecks and flow-related issues. While these mechanisms and algorithms work as intended, they ironically also cause delays in networks that suffer from high latency.

What makes TCP data transfers slow?

High latency refers to an undesirable network quality characterized by delays due to properties of the network medium, internal processes in intermediary network devices and the distance between two communicating hosts. While you can sometimes address issues involving the network medium and internal processes in network devices, there’s nothing you can do about the distance between two hosts.

For instance, if you’re sending a file from, say, Tokyo to New York, you can’t do anything to reduce the distance between those two cities. Or if you’re sending files between a satellite in space and a ground station, you can’t do anything to reduce the distance between those two points. Since the speed at which a signal can travel across any medium has an upper limit, longer distances will always take a longer time to traverse.

Thus, hosts separated by long distances will always suffer from high latency, which, as stated earlier, translates to delays. Even with a high-bandwidth internet connection, your throughput will still suffer from the effects of high latency. Although latency exists regardless of whether you’re using TCP or UDP, the effect of latency is aggravated by properties only found in TCP.

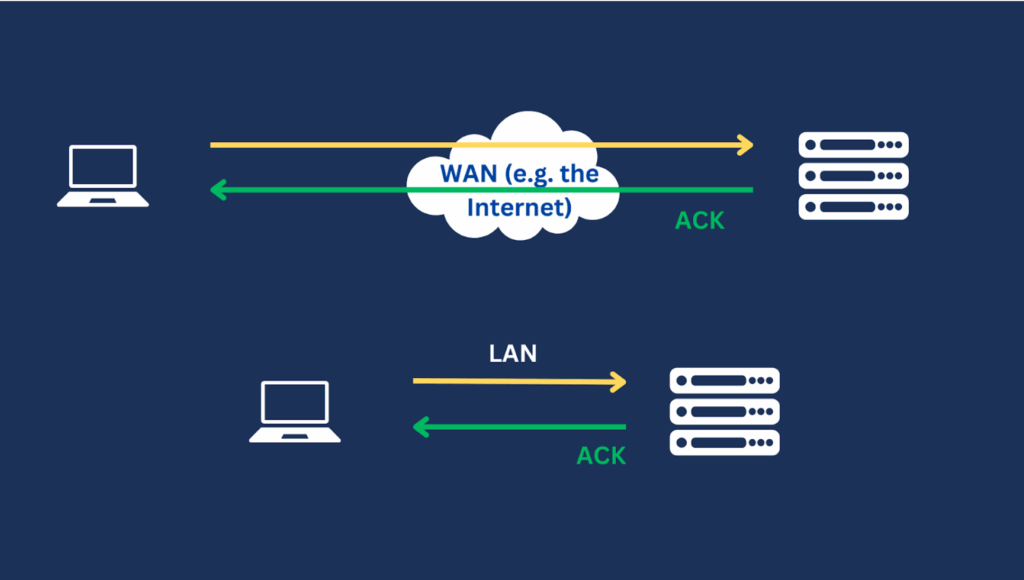

For instance, transmitted TCP packets have to traverse a longer distance in a wide area network (WAN) than in a local area network (LAN), and their corresponding ACK packets have to traverse that longer distance as well.

Since a TCP-based sender has to wait for certain ACK packets to arrive before sending out additional packets, longer distances can result in longer waiting times and, consequently, delay succeeding transmissions. But that’s not the only problem.
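To put rough numbers on that waiting time: a sender that can have at most one window of data in flight per round trip can’t exceed window size divided by round-trip time (RTT), no matter how much bandwidth is available. The sketch below assumes a 65,535-byte window (the largest TCP allows without window scaling), and the RTT values are illustrative guesses, not measurements.

```python
def max_throughput_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on throughput when one window is sent per round trip."""
    bits_per_round_trip = window_bytes * 8
    round_trips_per_second = 1000 / rtt_ms
    return bits_per_round_trip * round_trips_per_second / 1_000_000

# Illustrative RTTs only; real values depend on the route.
for label, rtt_ms in [("LAN", 1), ("cross-country", 70), ("intercontinental", 200)]:
    ceiling = max_throughput_mbps(65_535, rtt_ms)
    print(f"{label:>16} ({rtt_ms:>3} ms RTT): {ceiling:8.1f} Mbit/s")
```

With these assumptions, the same window that allows over 500 Mbit/s on a LAN tops out under 3 Mbit/s at a 200 ms RTT.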

As part of its congestion control mechanism, TCP transmissions always start slowly. This means that at the start, a TCP sender limits the rate at which it sends out data to prevent the network from getting overwhelmed. This rate is gradually increased based on the value of a variable known as the congestion window (cwnd).

Every time an ACK is received, that value and, in turn, the rate of transmission increase. So, if ACK packets are delayed, the rate increase is also adversely affected. None of these speed-impacting behaviors are present in a UDP file transfer.
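The ramp-up can be approximated with a short calculation. This sketch assumes the classic slow-start behavior, where cwnd roughly doubles every round trip until loss occurs, and an initial window of 10 segments. Both are assumptions, since real stacks vary, and `slow_start_round_trips` is a hypothetical helper.

```python
def slow_start_round_trips(target_segments: int, initial_cwnd: int = 10) -> int:
    """Round trips needed for cwnd to grow to target_segments.

    Assumes no packet loss and cwnd doubling once per round trip.
    """
    cwnd, round_trips = initial_cwnd, 0
    while cwnd < target_segments:
        cwnd *= 2  # one extra segment per ACK doubles cwnd each round trip
        round_trips += 1
    return round_trips

rounds = slow_start_round_trips(1000)
for rtt_ms in (1, 200):
    print(f"RTT {rtt_ms} ms: {rounds} round trips, about {rounds * rtt_ms} ms to ramp up")
```

The number of round trips is the same on any network, but each one costs far more time on a high-latency link.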

What is a UDP file transfer?

As the name suggests, a UDP-based file transfer is conducted over UDP. Datagrams are the fundamental units of data in a UDP transmission. Each datagram contains the data payload along with header information: source and destination ports, length and checksum. UDP is also a connectionless protocol. That means it doesn’t require a connection to be established before datagrams can be sent out. In contrast, TCP does require a connection, which is set up through a handshake (SYN, SYN-ACK and ACK) before any data can be sent. UDP has no inherent concept of ACKs at all.
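The four header fields are each 16 bits wide, so the whole UDP header is just 8 bytes. The sketch below packs and unpacks that layout with Python’s `struct` module. `build_datagram` and `parse_datagram` are illustrative names, and the checksum is left at 0 (which IPv4 treats as "not computed") to keep the example short.

```python
import struct

# Source port, destination port, length, checksum: four 16-bit
# big-endian fields, 8 bytes in total.
UDP_HEADER = struct.Struct("!HHHH")

def build_datagram(src_port: int, dst_port: int, payload: bytes, checksum: int = 0) -> bytes:
    """Prefix payload with a UDP-style header (checksum not computed here)."""
    length = UDP_HEADER.size + len(payload)  # the length field covers header + data
    return UDP_HEADER.pack(src_port, dst_port, length, checksum) + payload

def parse_datagram(datagram: bytes) -> dict:
    """Split a datagram back into its header fields and payload."""
    src, dst, length, checksum = UDP_HEADER.unpack_from(datagram)
    return {
        "src_port": src,
        "dst_port": dst,
        "length": length,
        "checksum": checksum,
        "payload": datagram[UDP_HEADER.size:length],
    }

print(parse_datagram(build_datagram(5000, 6000, b"chunk")))
```

In practice the operating system builds this header for you, and applications only ever see the payload.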

Why UDP data transfers are faster

Unlike TCP, UDP doesn’t have any provisions for reliability, congestion control or flow control. For instance, a sending UDP host doesn’t wait for ACKs before sending additional data. Moreover, the host doesn’t pay attention to network conditions or the receiver’s capacity to receive data when sending out packets. As such, UDP throughput isn’t as impacted by high latency and packet loss as TCP throughput is.
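A small loopback demo makes the "send and move on" behavior concrete. It’s a minimal sketch using Python’s standard `socket` module. `send_chunks` is a hypothetical helper, and the loopback address and one-second timeout are assumptions chosen for the demo.

```python
import socket

def send_chunks(chunks, address):
    """Send each chunk as its own datagram without waiting for any reply."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
        for chunk in chunks:
            # sendto() returns once the OS accepts the datagram. There is
            # no handshake beforehand and no ACK afterwards.
            sender.sendto(chunk, address)

# Receiver on the loopback interface, on whatever free port the OS picks.
receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
receiver.bind(("127.0.0.1", 0))
receiver.settimeout(1.0)

send_chunks([b"part-1", b"part-2", b"part-3"], receiver.getsockname())

received = []
try:
    while len(received) < 3:
        data, _ = receiver.recvfrom(2048)
        received.append(data)
except socket.timeout:
    pass  # anything that hasn't arrived by now is simply gone
finally:
    receiver.close()

print(f"received {len(received)} of 3 datagrams")
```

The sender never learns whether any of the three datagrams arrived, which is exactly why it never has to wait.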

Of course, while the absence of reliability, congestion control and flow control capabilities results in faster throughput, it also makes you susceptible to incomplete and error-plagued data transfers. If you’re sending a business document, you wouldn’t want to lose any piece of information. Unfortunately, UDP can’t guarantee that won’t happen.
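Applications that build on UDP usually add their own bookkeeping to notice such gaps. The sketch below shows one common idea, prefixing every chunk with a sequence number so the receiver can list what’s missing. It’s a generic illustration with made-up names (`frame`, `missing_chunks`), not a description of how any particular product works.

```python
import struct

SEQ = struct.Struct("!I")  # 4-byte big-endian sequence number

def frame(seq: int, chunk: bytes) -> bytes:
    """Prefix a chunk with its sequence number before sending it."""
    return SEQ.pack(seq) + chunk

def missing_chunks(datagrams, total: int):
    """Return the sequence numbers that never arrived, in ascending order."""
    seen = {SEQ.unpack_from(d)[0] for d in datagrams}
    return sorted(set(range(total)) - seen)

sent = [frame(i, b"data-%d" % i) for i in range(5)]
arrived = [sent[4], sent[0], sent[2]]  # chunks 1 and 3 were lost in transit
print(missing_chunks(arrived, total=5))
```

The receiver can then request only the missing chunks instead of the whole file.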

How UDP is being used

UDP is primarily used for fast, low-latency communication between applications, particularly in scenarios where occasional data loss is acceptable. It’s connectionless, which means it doesn’t require a handshake and doesn’t guarantee delivery. This makes UDP suitable for time-sensitive applications like voice and video communication, online gaming and DNS lookups.

Other uses for UDP-based file transfers include:

- Collaboration and exchange for geographically distributed teams

- Content distribution and collection, e.g., software upgrades, source code updates or CDN scenarios

- Continuous sync – near real-time syncing for active-active style HA

- File-based review, approval and quality assurance workflows

- Person-to-person distribution of digital assets

- Replication, from basic master-slave setups to more complex bi-directional sync and mesh scenarios

How to combine UDP and TCP

It can be advantageous to use both TCP and UDP within the same operating system, application or network. TCP and UDP are independent protocols, so they can operate concurrently without conflicting, even on the same port number. This allows applications to leverage the strengths of both protocols for different purposes. TCP provides a reliable, connection-oriented stream, while UDP offers a connectionless, unreliable datagram service.
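You can see the two protocols sharing a port number with a few lines of Python. In this sketch, `bind_both` is a hypothetical helper. It asks the OS for a free TCP port, then binds a UDP socket to the same number, retrying if another process already holds that UDP port.

```python
import socket

def bind_both(host: str = "127.0.0.1", attempts: int = 5):
    """Bind a TCP and a UDP socket to the same port number on host."""
    for _ in range(attempts):
        tcp = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        tcp.bind((host, 0))  # port 0 lets the OS pick a free TCP port
        port = tcp.getsockname()[1]
        udp = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
        try:
            udp.bind((host, port))  # separate port space, so no clash with TCP
        except OSError:
            # Another process already uses this UDP port; try a new number.
            tcp.close()
            udp.close()
            continue
        tcp.listen()
        return tcp, udp, port
    raise OSError("could not find a port free for both protocols")

tcp_sock, udp_sock, shared_port = bind_both()
print(f"TCP and UDP are both bound to port {shared_port}")
tcp_sock.close()
udp_sock.close()
```

A hybrid design could use the TCP socket for control traffic and the UDP socket for bulk data, all behind a single port number.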

Some ways to combine UDP and TCP in your operations are:

- Domain name system (DNS): DNS uses both TCP and UDP. UDP is used for standard name lookups, while TCP is used for larger responses like zone transfers.

- File sharing with speed optimization: UDP can reduce latency by completing initial sharing of small data chunks like file headers, while TCP can ensure the reliable and ordered delivery of large files.

- Hybrid web applications: UDP can be used for short web conversations like HTTP redirections, while TCP can be used to deliver the overall web content.

- Internet of Things (IoT): UDP can carry frequent small data packets like sensor readings from devices, while TCP can be used to send critical commands or configuration updates.

- Reliable data with speed: TCP can handle reliable, ordered data transfer, and UDP can be used for speed-sensitive, less critical data, with the application handling potential loss.

- Streaming with control messages: TCP can be used to stream large amounts of data (e.g., video files), while UDP can send control messages (e.g., commands and status updates).

Get the best of both worlds with AFTP

The UDP component of JSCAPE’s AFTP maintains fast throughput regardless of network conditions. At the same time, its TCP component takes care of other tasks such as user authentication, file management and the coordination of file transfers. These combined qualities can be useful in large file transfers over high-latency networks.

AFTP is a key feature of JSCAPE, a managed file transfer solution that supports a wide range of file transfer protocols, including FTP, SFTP, HTTP, AS2, OFTP and many others. JSCAPE is equipped with an array of security and automation features, plus an API, making it capable of supporting any file transfer workflow.

Would you like to witness AFTP in action? Request a quick demo now.